Crispin Hitchings‑Anstice

My work is in developing technologies to create and facilitate artistic works. My current focus is on developing musical instruments and tools and on DSP programming. I also create interactive musical programs that use techniques and algorithms from graphics programming to drive the musical or visual elements. I have a Bachelor of Science in Computer Graphics and a Bachelor of Music in Sonic Arts and Music Technology, both from Victoria University of Wellington, and I am currently completing a Bachelor of Music with Honours, which allows me to study and combine creative and technical practice.

Modal Synthesiser

As part of my Honours study I developed a synthesiser plugin using modal synthesis, a physical modelling technique that uses resonators tuned to a harmonic series. The design of the synthesiser draws on techniques from the spectralism compositional movement. I developed the synthesiser in C++ with the JUCE framework as a VST/AU plugin.
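To illustrate the general idea of modal synthesis, here is a minimal Python sketch, not the plugin's C++ code. It assumes each mode is a two-pole resonator excited by a single impulse, with modes at integer multiples of the fundamental. The function name `modal_voice`, the 1/k mode gains, and the rule that higher modes decay faster are all illustrative assumptions.

```python
import math

def modal_voice(f0, num_modes=8, decay_s=0.5, sample_rate=44100, duration_s=1.0):
    """Sum the impulse responses of resonators tuned to f0, 2*f0, 3*f0, ...

    Hypothetical sketch: the gains and decay times are assumptions chosen
    for illustration only.
    """
    n_samples = int(sample_rate * duration_s)
    out = [0.0] * n_samples
    for k in range(1, num_modes + 1):
        freq = f0 * k
        if freq >= sample_rate / 2:
            break  # skip modes above the Nyquist frequency
        w = 2.0 * math.pi * freq / sample_rate
        # Pole radius sets the decay; mode k decays k times faster (assumption).
        r = math.exp(-k / (decay_s * sample_rate))
        a1 = 2.0 * r * math.cos(w)
        a2 = -r * r
        gain = 1.0 / k  # assumed spectral roll-off
        y1 = y2 = 0.0
        for n in range(n_samples):
            x = gain if n == 0 else 0.0  # single impulse as the excitation
            y = x + a1 * y1 + a2 * y2    # two-pole resonator recurrence
            out[n] += y
            y2, y1 = y1, y
    return out
```

Changing the per-mode gains and decay times reshapes the spectrum of each note directly, which is the kind of control over harmonics that modal synthesis offers.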

To test and demonstrate the synthesiser I have composed three études that demonstrate its features and use it in a full music production environment.

Interference Patterns and Phasing Sequencer

This piece draws on concepts from minimal music, particularly ‘phasing’ as used by Steve Reich and other artists. A short phrase is played against a copy of itself, offset and shifting in time, revealing different interactions between the two phrases: a musical moiré effect. In creating this I studied many works that use phasing, examining how they are structured. I used this research to build a sequencer in the Max programming environment that performs the phasing, and this sequencer is the centre of the live electronics that realise the piece. I composed this piece as part of my Honours study, supervised by Mo Zareei. Finalist in the 2024 Lilburn Trust NZSM Composers Competition. Full EP release forthcoming.
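The phasing process can be sketched in a few lines of Python. This is an illustration of the general technique only, not the Max sequencer: it assumes two voices play the same phrase, with the second voice's step length stretched by a small `drift` factor so that it gradually falls behind. The function name and parameters are hypothetical.

```python
def phased_onsets(phrase, step_s, drift, repeats):
    """Return (time, note, voice) events for a phrase played against a
    slightly slower copy of itself.

    Hypothetical sketch: voice 0 uses step_s per note, voice 1 uses
    step_s * (1 + drift).
    """
    events = []
    for voice, step in enumerate((step_s, step_s * (1.0 + drift))):
        for i in range(len(phrase) * repeats):
            events.append((i * step, phrase[i % len(phrase)], voice))
    # Order by time, then by voice, so simultaneous onsets are stable.
    events.sort(key=lambda e: (e[0], e[2]))
    return events
```

With `drift=0.01`, the second voice is one full step behind after 100 steps, at which point the two phrases lock together again in a new alignment.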

Phase Machine in use in Ableton Live

Lakes Over Clouds

I had two main goals for this composition. The first was to use a synthesiser I have been building in a complete work. The synthesiser uses modal synthesis, a variant of physical modelling that gives the composer precise control over the harmonics of the notes played. My second goal was to work collaboratively and with a performer in a way that I usually don’t. By writing a graphical score and leaving things up to the interpretation of the performer I let go of the precise control afforded by the tools of electronic music, my main medium.

Premiered at Sounds of Te Kōkī May 2024, performed by Estella Wallace, recorded by Joel Jayson Chuah & myself, audio and video production by Kassandra Wang.

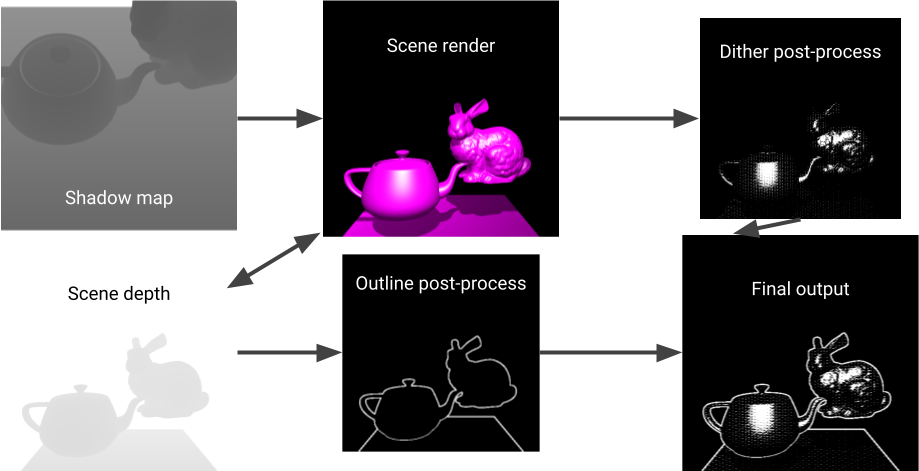

Infinite Driver graphics demo

As part of my computer graphics studies, my team implemented a real-time rendered physical simulation, showcased through a driving simulator. The simulation has the following components: procedurally generated environments, physically based vehicle animations, and novel post-processing of the framebuffer output, which achieves a unique non-photorealistic visual aesthetic.

My role in the project was to build a rendering pipeline and to implement a non-photorealistic style. The core component of this is a post-processing method of dithering an image (in the style of 1-bit computer displays) optimised for real-time 3D interactive use, using a spherically-mapped dither pattern to avoid flickering artefacts when the camera rotates. This technique was developed for the 2018 game Return of the Obra Dinn. I then extended this to create new visual effects: a 1-bit-per-channel RGB colour mode, a halftone dots comic/print dither pattern, and some experimental dither patterns created by hand.
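As a point of reference for the 1-bit style, here is a minimal Python sketch of ordinary screen-space ordered dithering with a 4x4 Bayer threshold matrix. It does not show the spherically-mapped pattern described above, nor the project's shader code; the function name `dither_1bit` and the list-of-lists greyscale image format are assumptions for illustration.

```python
# Standard 4x4 Bayer matrix; entries 0..15 become thresholds in (0, 1).
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]

def dither_1bit(image):
    """Convert a greyscale image (rows of floats in [0, 1]) to 0/1 pixels.

    Hypothetical sketch: the pattern is tiled in screen space, which is the
    simple case that a spherically-mapped pattern improves on.
    """
    out = []
    for y, row in enumerate(image):
        out_row = []
        for x, value in enumerate(row):
            threshold = (BAYER_4X4[y % 4][x % 4] + 0.5) / 16.0
            out_row.append(1 if value > threshold else 0)
        out.append(out_row)
    return out
```

Applying the same thresholding independently to the red, green, and blue channels gives a 1-bit-per-channel colour result.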

I also implemented several other rendering algorithms: Blinn-Phong shading, shadow mapping, and outline shading. In addition, I developed C++ classes and functions for framebuffers and shader uniforms, and integrated the rendering pipeline into the component-based scene graph. I also used and extended Phuwasate’s OpenGL/C++ interface functions, which ensure OpenGL objects are always properly created, destroyed, bound, and unbound.
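For readers unfamiliar with Blinn-Phong shading, the core calculation can be sketched as follows. This is a plain Python illustration of the standard model, not the project's GLSL; the function names, the default `shininess`, and the `kd`/`ks` coefficients are assumptions.

```python
import math

def normalize(v):
    """Return v scaled to unit length (or unchanged if it has zero length)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v) if length > 0.0 else tuple(v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def blinn_phong(normal, to_light, to_view, shininess=32.0, kd=1.0, ks=1.0):
    """Return (diffuse, specular) intensities for one light.

    Hypothetical sketch: all vectors point away from the surface point.
    """
    n, l, v = normalize(normal), normalize(to_light), normalize(to_view)
    diffuse = kd * max(dot(n, l), 0.0)
    # The half vector between light and view replaces Phong's reflection vector.
    h = normalize(tuple(a + b for a, b in zip(l, v)))
    specular = ks * max(dot(n, h), 0.0) ** shininess if diffuse > 0.0 else 0.0
    return diffuse, specular
```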

Team members: myself (Rendering), Anfri Hayward (City and terrain generation), Phuwasate Lutchanont (Scene graph, physics library integration)

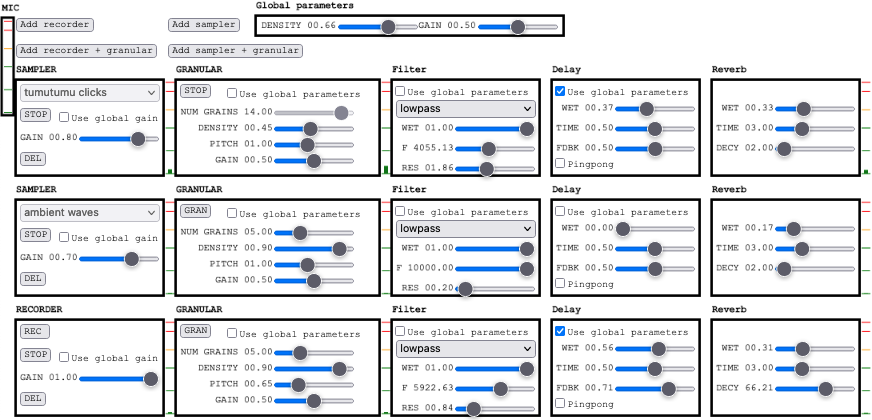

Live Performance Tool

This is a live performance tool for looping and processing sampled and live-recorded loops and field recordings using granular synthesis. I built it as the technological component for my final performance in the taonga puoro course taught by Rob Thorne, so I could design the project around what I needed for that performance and for the way I play taonga puoro — in particular a focus on improvisation, continuous or flowing gestures rather than quantised or “grid-based” ones, and a focus on supporting or extending the natural timbre of the puoro rather than distorting or synthesising timbre.

This was built using p5.js, and features audio recording and processing (in part using the granular-js library) and a hierarchical UI system that allows for signal chains to be added and removed.

Click screenshot to open, source code on GitHub.

Seer’s Gate (group project)

This was a group project, with a team of one design student and five computer science students. The aim of the project was to create a game in Unreal Engine 5 and to explore and develop strategies for working as a team, including working remotely and asynchronously. My main work was on the character controller, including character movement, attacking, and health; animation playback; and integration with other game systems.

Full credits in the video description.

Music for picture

These are assignments from a course on film composition taught by David Long. The course focused on using and combining sampled, recorded, and synthesised instruments in scoring, developing creative skills and techniques around composing for picture, and the process of scoring for screen both musically and practically.

Spatial Composing

As part of CMPO 310 ‘Electronic Music, Sound Design and Spatial Audio’ I have composed several works for spatial audio. I have composed for several different speaker setups, including speaker hemispheres, the IKO 20-sided loudspeaker, and combinations of these, using the ambisonics surround format. I have used electronic music and modular synthesis methods to create these compositions.

Visual simulation in Houdini

I have completed a course in Technical Effects and Simulation, where I used Houdini to create simulations for visual effects sequences. I designed and created several visual effects sequences, and used Houdini’s particle and physics solvers and its VEX scripting capabilities.

Audio (VST) Programming

I have been teaching myself lower-level audio programming to further combine my sonic arts and computer graphics practices. I have been doing this with C++ and the JUCE framework to create VST/AU/etc audio plugins. I created the Delrus plugin, which applies a chorus effect to the feedback path of a delay, to practise creating a whole plugin from start to finish and to implement the features essentially every plugin will need: audio processing, DAW-accessible and saveable parameters, UI controls and layout, UI theme, JUCE modules, and building with CMake and the Projucer.
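The signal flow of a delay with a chorus in its feedback path can be sketched offline in Python. This is an illustration of the general idea, not the Delrus source: it assumes the chorus is a short delay line read at a position modulated by a sine LFO, mixed equally with its input. All parameter names and default values are hypothetical.

```python
import math

def delay_with_chorused_feedback(signal, sample_rate=44100, delay_s=0.25,
                                 feedback=0.5, chorus_base_s=0.010,
                                 chorus_depth_s=0.003, chorus_rate_hz=0.8):
    """Process a list of samples through a feedback delay whose feedback
    path contains a simple chorus (hypothetical sketch)."""
    delay_len = int(delay_s * sample_rate)
    main = [0.0] * delay_len  # circular buffer for the main delay
    chorus_len = int((chorus_base_s + chorus_depth_s) * sample_rate) + 3
    chorus = [0.0] * chorus_len  # circular buffer for the chorus
    out = []
    mi = ci = 0
    for n, x in enumerate(signal):
        delayed = main[mi]  # sample written delay_len samples ago
        chorus[ci] = delayed
        # LFO sweeps the chorus read position around its base delay.
        lfo = math.sin(2.0 * math.pi * chorus_rate_hz * n / sample_rate)
        d = (chorus_base_s + chorus_depth_s * lfo) * sample_rate
        pos = (ci - d) % chorus_len
        i0 = int(pos) % chorus_len
        frac = pos - int(pos)
        i1 = (i0 + 1) % chorus_len
        modulated = chorus[i0] * (1.0 - frac) + chorus[i1] * frac  # linear interpolation
        wet = 0.5 * (delayed + modulated)  # chorus = equal mix of direct and modulated
        main[mi] = x + feedback * wet      # chorused signal is what gets fed back
        out.append(x + delayed)
        mi = (mi + 1) % delay_len
        ci = (ci + 1) % chorus_len
    return out
```

Because the chorus sits inside the loop, each successive echo passes through it again, so later repeats become progressively more smeared and detuned than earlier ones.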

ECOSYSTEM

ECOSYSTEM is a generative ambient piece built around a visual environment of simulated entities. Each entity, mimicking a fish or a bird, moves with the entities around it, creating flocks and swarms. The overall movement and energy of the environment control the movement of the synthesis, creating a flowing soundscape connected with the visual elements.

The entities are controlled by the Boids algorithm, and ECOSYSTEM was an opportunity to use techniques from computer graphics in sonic arts.
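A single update step of the Boids algorithm can be sketched in Python as below. This is an illustration of the standard three rules (separation, alignment, cohesion) in 2D, not the Max/MSP patch; the weights, the shared neighbourhood radius, and the `flock_energy` measure used as a possible control signal are all assumptions.

```python
import math

def boids_step(positions, velocities, radius=5.0, sep_w=0.05,
               align_w=0.05, coh_w=0.01, max_speed=2.0):
    """Advance 2D boids by one step and return (new_positions, new_velocities).

    Hypothetical sketch: one radius is used for all three rules.
    """
    new_vel = []
    for i, (p, v) in enumerate(zip(positions, velocities)):
        near = [j for j, q in enumerate(positions)
                if j != i and math.dist(p, q) < radius]
        vx, vy = v
        if near:
            n = len(near)
            # Cohesion: steer towards the neighbours' centre.
            cx = sum(positions[j][0] for j in near) / n
            cy = sum(positions[j][1] for j in near) / n
            # Alignment: steer towards the neighbours' average velocity.
            ax = sum(velocities[j][0] for j in near) / n
            ay = sum(velocities[j][1] for j in near) / n
            # Separation: steer away from each neighbour.
            sx = sum(p[0] - positions[j][0] for j in near)
            sy = sum(p[1] - positions[j][1] for j in near)
            vx += coh_w * (cx - p[0]) + align_w * (ax - v[0]) + sep_w * sx
            vy += coh_w * (cy - p[1]) + align_w * (ay - v[1]) + sep_w * sy
        speed = math.hypot(vx, vy)
        if speed > max_speed:  # clamp so the flock stays controllable
            vx, vy = vx / speed * max_speed, vy / speed * max_speed
        new_vel.append((vx, vy))
    new_pos = [(p[0] + v[0], p[1] + v[1]) for p, v in zip(positions, new_vel)]
    return new_pos, new_vel

def flock_energy(velocities):
    """Mean speed of the flock, one possible value to map onto synthesis."""
    return sum(math.hypot(vx, vy) for vx, vy in velocities) / len(velocities)
```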

Premiered at Sounds of Te Kōkī May 2022, created in Max/MSP, filmed by SOUNZ.