Crispin Hitchings‑Anstice

Interference Patterns and Phasing Sequencer

This piece draws from concepts of minimal music, particularly ‘phasing’ as used by Steve Reich and other artists. A short phrase is played against a copy of itself, offset and shifting in time, revealing different interactions between the two phrases: a musical moiré effect. In creating this piece I studied many works that use phasing, examining how they are structured. I used this research to build a sequencer in the Max programming environment that performs the phasing, and this sequencer is the centre of the live electronics that realise the piece. I composed this piece as part of my Honours study, supervised by Mo Zareei. Finalist in the 2024 Lilburn Trust NZSM Composers Competition. Full EP release forthcoming.
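The core idea of phasing, one phrase sounding against a shifted copy of itself, can be sketched in a few lines of Python. This is an illustration only and not the Max sequencer described above: it shifts the second voice in whole steps rather than drifting continuously, and the pattern and the names `rotate` and `phase_cycle` are assumptions made for the example.

```python
def rotate(pattern, offset):
    """Return the pattern rotated left by `offset` steps, wrapping around."""
    offset %= len(pattern)
    return pattern[offset:] + pattern[:offset]


def phase_cycle(pattern):
    """Yield (offset, shared_onsets) for every whole-step offset of voice two."""
    for offset in range(len(pattern)):
        shifted = rotate(pattern, offset)
        # Count the steps where both voices sound at the same time.
        shared = sum(1 for a, b in zip(pattern, shifted) if a and b)
        yield offset, shared


# Illustrative 12-step pattern: 1 is an onset, 0 is a rest.
PATTERN = [1, 1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 0]

if __name__ == "__main__":
    for offset, shared in phase_cycle(PATTERN):
        print(f"offset {offset:2d}: {shared} shared onsets")
```

Each offset gives a different composite rhythm, and the number of shared onsets is one rough measure of how the two voices interlock at that point in the cycle.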

Phase Machine in use in Ableton Live


Modal Synthesiser


As part of my Honours study I developed a synthesiser plugin using modal synthesis, a physical modelling technique using resonators tuned to a harmonic series. The design of the synthesiser draws from techniques used in the spectralism compositional movement. I developed the synthesiser as a VST/AU plugin using C++ and the JUCE framework.
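As a rough illustration of modal synthesis, the sketch below excites a bank of two-pole resonators tuned to the harmonics of a fundamental with a single impulse. It is written in Python for brevity and is not the plugin's C++ code; the function names, the 1/k amplitude weighting, and the 1/k decay times are all assumptions chosen for the example.

```python
import math


def resonator_coeffs(freq_hz, decay_s, sample_rate):
    """Return (a1, a2, b0) for a two-pole resonator ringing at freq_hz.

    decay_s is the time the mode takes to fall by 60 dB.
    """
    r = 10.0 ** (-3.0 / (decay_s * sample_rate))  # pole radius per sample
    w = 2.0 * math.pi * freq_hz / sample_rate     # pole angle in radians
    # b0 = sin(w) scales the impulse response so its envelope starts near 1.
    return 2.0 * r * math.cos(w), -r * r, math.sin(w)


def modal_voice(f0, num_modes, duration_s, sample_rate=44100):
    """Render one impulse-excited note from a harmonic bank of resonators."""
    modes = []
    for k in range(1, num_modes + 1):
        freq = f0 * k
        if freq >= sample_rate / 2:
            break  # skip modes above the Nyquist frequency
        # Assumed for illustration: higher modes are quieter and decay faster.
        a1, a2, b0 = resonator_coeffs(freq, 1.0 / k, sample_rate)
        modes.append({"a1": a1, "a2": a2, "b0": b0 / k, "y1": 0.0, "y2": 0.0})

    out = []
    for n in range(int(duration_s * sample_rate)):
        x = 1.0 if n == 0 else 0.0  # a single impulse as the excitation
        sample = 0.0
        for m in modes:
            y = m["b0"] * x + m["a1"] * m["y1"] + m["a2"] * m["y2"]
            m["y2"], m["y1"] = m["y1"], y
            sample += y
        out.append(sample)
    return out
```

Changing the frequency, gain, and decay of each mode independently is what gives this technique its direct control over the spectrum of a note.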

I presented this synth at the Composers Association of New Zealand Conference 2025.

To test and demonstrate the synthesiser I have composed three études that show its features and use it in a full music production environment.

Lakes Over Clouds

I had two main goals for this composition. The first was to use a synthesiser I have been building in a complete work. The synthesiser uses modal synthesis, a variant of physical modelling that gives the composer precise control over the harmonics of the notes played. My second goal was to work collaboratively and with a performer in a way that I usually don’t. By writing a graphical score and leaving things up to the interpretation of the performer I let go of the precise control afforded by the tools of electronic music, my main medium.

Premiered at Sounds of Te Kōkī May 2024, performed by Estella Wallace, recorded by Joel Jayson Chuah & myself, audio and video production by Kassandra Wang.

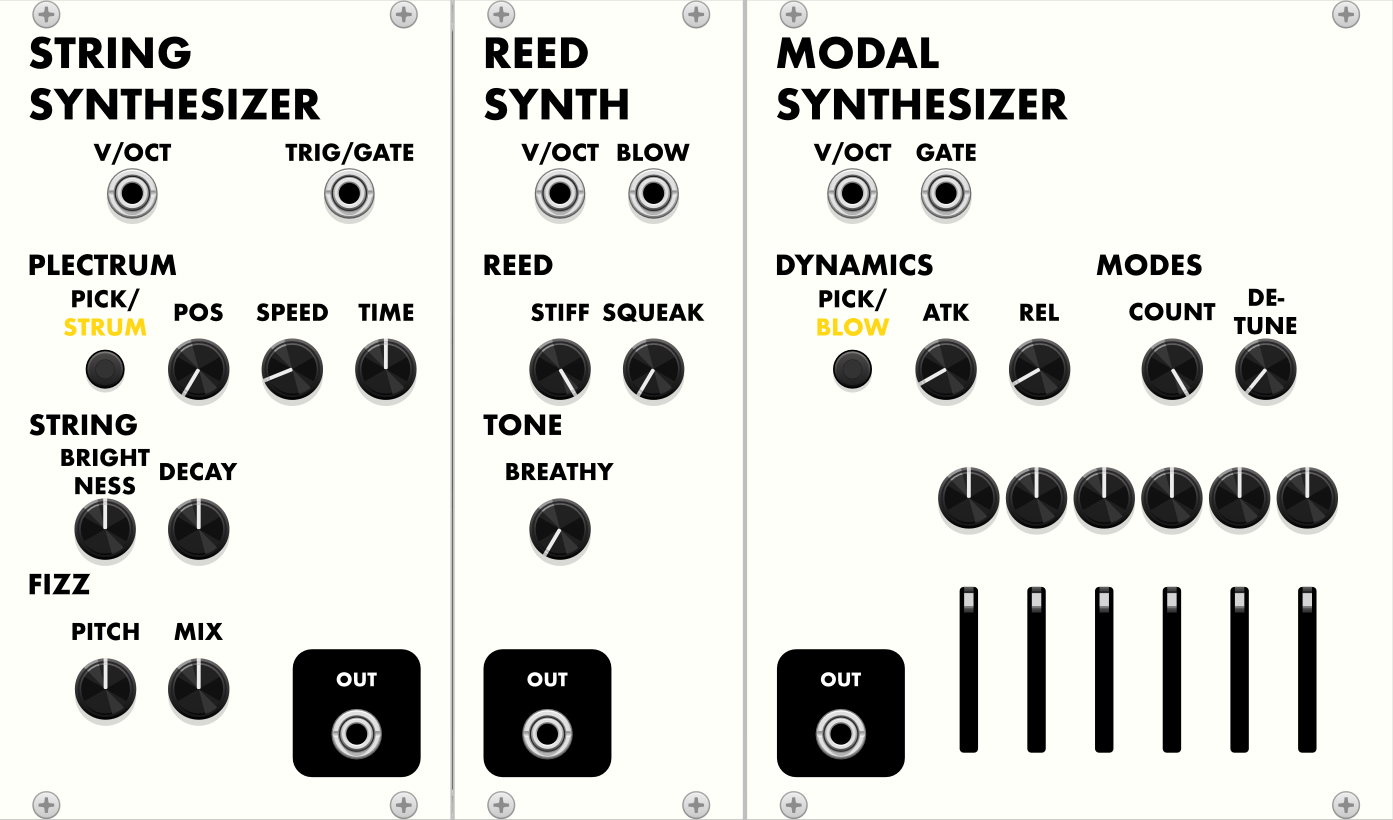

Physical Modelling DSP plugin for VCV Rack

As part of my Honours study I created three instruments using physical modelling techniques for the VCV Rack virtual modular platform. The three instruments showcase different DSP techniques: waveguides and Karplus-Strong string synthesis, modal synthesis, and reed modelling. I programmed the instruments in C++ and have developed a C++ library of DSP classes. Each instrument has a user interface that distils the underlying DSP parameters into a smaller, musically interesting set, so the composer or performer does not need to understand the instrument's technical construction.
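For readers unfamiliar with Karplus-Strong string synthesis, here is a minimal sketch of the basic algorithm: a delay line one period long is filled with noise, then repeatedly read and low-pass filtered. It is written in Python rather than the C++ used for the plugin, and the function name, parameters, and the particular averaging variant are assumptions for illustration, not the instrument's implementation.

```python
import random


def karplus_strong(freq_hz, duration_s, sample_rate=44100, damping=0.996, seed=0):
    """Render a plucked-string tone with a basic Karplus-Strong loop."""
    period = max(2, round(sample_rate / freq_hz))  # delay length in samples
    rng = random.Random(seed)
    # The "pluck": one period of white noise in the delay line.
    delay_line = [rng.uniform(-1.0, 1.0) for _ in range(period)]

    out = []
    for n in range(int(duration_s * sample_rate)):
        i = n % period
        nxt = (i + 1) % period
        out.append(delay_line[i])
        # Overwrite the sample just read with the damped average of itself
        # and its neighbour. The averaging acts as a gentle low-pass filter,
        # so high harmonics die away faster than low ones.
        delay_line[i] = damping * 0.5 * (delay_line[i] + delay_line[nxt])
    return out
```

Because the delay length is a whole number of samples, the pitch is only approximate at high frequencies; fuller waveguide designs usually add fractional-delay interpolation.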

Spatial Composing

As part of CMPO 310 ‘Electronic Music, Sound Design and Spatial Audio’ I have composed several works for spatial audio. I have composed for several different speaker setups, including speaker hemispheres, the IKO 20-sided loudspeaker, and combinations of these, using the ambisonics surround format. I have used electronic music and modular synthesis methods to create these compositions.

Music for picture

These are assignments from a course on film composition taught by David Long. The course focused on using and combining sampled, recorded, and synthesised instruments in scoring, developing creative skills and techniques around composing for picture, and the process of scoring for screen both musically and practically.

Audio (VST) Programming

I have been teaching myself lower-level audio programming to further combine my sonic arts and computer graphics practices, using C++ and the JUCE framework to create VST, AU and similar audio plugins. I created the Delrus plugin, which applies a chorus effect to the feedback path of a delay, to practise building a whole plugin from start to finish and to implement the features that essentially every plugin needs: audio processing, DAW-accessible and saveable parameters, UI controls and layout, UI theme, JUCE modules, and building with CMake and the Projucer.
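The signal flow of a delay with a chorus in its feedback path can be sketched as follows. This is a simplified Python illustration and not the Delrus source: the function name, parameter names, default values, and the single-voice sine-modulated chorus are all assumptions.

```python
import math


def chorus_feedback_delay(signal, sample_rate=44100, delay_s=0.25, feedback=0.5,
                          chorus_depth_s=0.003, chorus_rate_hz=0.8):
    """Feedback delay whose repeats pass through a one-voice chorus."""
    main_len = max(1, round(delay_s * sample_rate))
    main = [0.0] * main_len                  # fixed-length main delay line
    depth = chorus_depth_s * sample_rate     # chorus sweep width in samples
    chorus_len = int(depth) + 3
    chorus = [0.0] * chorus_len              # short line for the modulated tap

    out = []
    for n, x in enumerate(signal):
        mi = n % main_len
        delayed = main[mi]                   # written main_len samples ago

        # Chorus: mix the delayed signal with a copy read through a slowly
        # modulated short delay, using linear interpolation between samples.
        ci = n % chorus_len
        chorus[ci] = delayed
        lfo = 0.5 * (1.0 + math.sin(2.0 * math.pi * chorus_rate_hz * n / sample_rate))
        pos = (ci - (1.0 + depth * lfo)) % chorus_len
        i0 = int(pos) % chorus_len
        frac = pos - int(pos)
        wobble = (1.0 - frac) * chorus[i0] + frac * chorus[(i0 + 1) % chorus_len]
        chorused = 0.5 * (delayed + wobble)

        # Only the fed-back signal is chorused, so every repeat picks up
        # a little more detuning and smearing than the one before it.
        main[mi] = x + feedback * chorused
        out.append(x + delayed)
    return out
```

Feeding in a single impulse shows the behaviour: the first echo is clean, and later echoes are progressively blurred by the chorus.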

Live Performance Tool

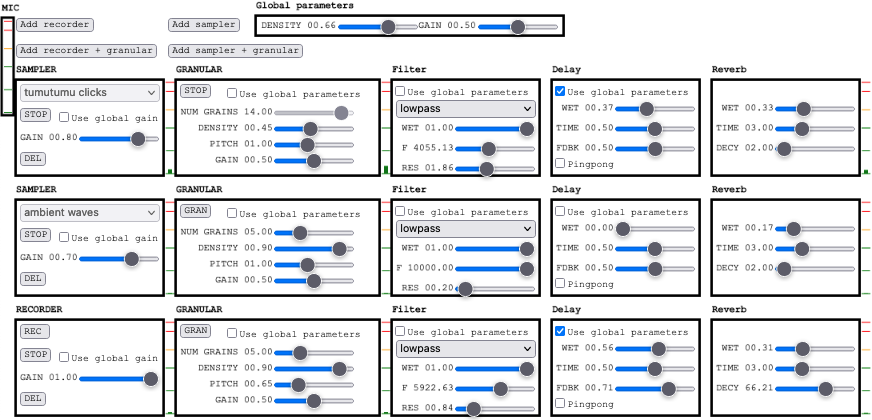

This is a live performance tool for looping and processing sampled and live-recorded loops and field recordings using granular synthesis. I built it as the technological component for my final performance in the taonga puoro course taught by Rob Thorne, so I could design the project around what I needed for that performance and for the way I play taonga puoro — in particular a focus on improvisation, continuous or flowing gestures rather than quantised or “grid-based” ones, and a focus on supporting or extending the natural timbre of the puoro rather than distorting or synthesising timbre.

This was built using p5.js, and features audio recording and processing (in part using the granular-js library) and a hierarchical UI system that allows for signal chains to be added and removed.

Click screenshot to open, source code on GitHub.

ECOSYSTEM

ECOSYSTEM is a generative ambient piece built around a visual environment of simulated entities. Each entity, mimicking a fish or a bird, moves with the entities around it, creating flocks and swarms. The overall movement and energy of the environment controls the movement of the synthesis, creating a flowing soundscape connected with the visual elements.

The entities are controlled by the Boids algorithm, and ECOSYSTEM was an opportunity to use techniques from computer graphics in sonic arts.
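A single update step of a Boids-style flock, plus one possible "energy" measure that could drive a synthesis parameter, might look like the sketch below. ECOSYSTEM itself was made in Max/MSP, so this Python version is only an illustration; the function names, rule weights, and the choice of mean speed as the energy measure are assumptions.

```python
import math


def boids_step(positions, velocities, radius=50.0, min_dist=10.0,
               cohesion=0.01, alignment=0.05, separation=0.1, max_speed=4.0):
    """Advance a 2D flock by one step and return (positions, velocities)."""
    new_velocities = []
    for i, ((px, py), (vx, vy)) in enumerate(zip(positions, velocities)):
        cx = cy = ax = ay = sx = sy = 0.0
        count = 0
        for j, ((qx, qy), (wx, wy)) in enumerate(zip(positions, velocities)):
            if i == j:
                continue
            dx, dy = qx - px, qy - py
            dist = math.hypot(dx, dy)
            if dist < radius:
                cx += qx; cy += qy          # for cohesion: neighbour centre
                ax += wx; ay += wy          # for alignment: neighbour heading
                count += 1
                if dist < min_dist:
                    sx -= dx; sy -= dy      # for separation: push away
        if count:
            nvx = vx + cohesion * (cx / count - px) + alignment * (ax / count - vx) + separation * sx
            nvy = vy + cohesion * (cy / count - py) + alignment * (ay / count - vy) + separation * sy
        else:
            nvx, nvy = vx, vy
        speed = math.hypot(nvx, nvy)
        if speed > max_speed:               # clamp to a maximum speed
            nvx, nvy = nvx / speed * max_speed, nvy / speed * max_speed
        new_velocities.append((nvx, nvy))

    new_positions = [(px + vx, py + vy)
                     for (px, py), (vx, vy) in zip(positions, new_velocities)]
    return new_positions, new_velocities


def mean_speed(velocities):
    """Average speed of the flock, usable as a simple 'energy' control value."""
    if not velocities:
        return 0.0
    return sum(math.hypot(vx, vy) for vx, vy in velocities) / len(velocities)
```

A value such as `mean_speed` could be mapped to a filter cutoff or modulation depth, so that a calm flock produces a calmer soundscape.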

Premiered at Sounds of Te Kōkī May 2022, created in Max/MSP, filmed by SOUNZ.

Uncommon Orbit

Uncommon Orbit is an interactive audio-visual composition that combines Brian Eno-style ambient “tape” loops and glitch-type aesthetics, working with musical contrasts like flowing vs rhythmic/pulsed and continuous vs random. Built with p5.js.

Click to begin, hold mouse button to change modes, mouse position changes parameters.

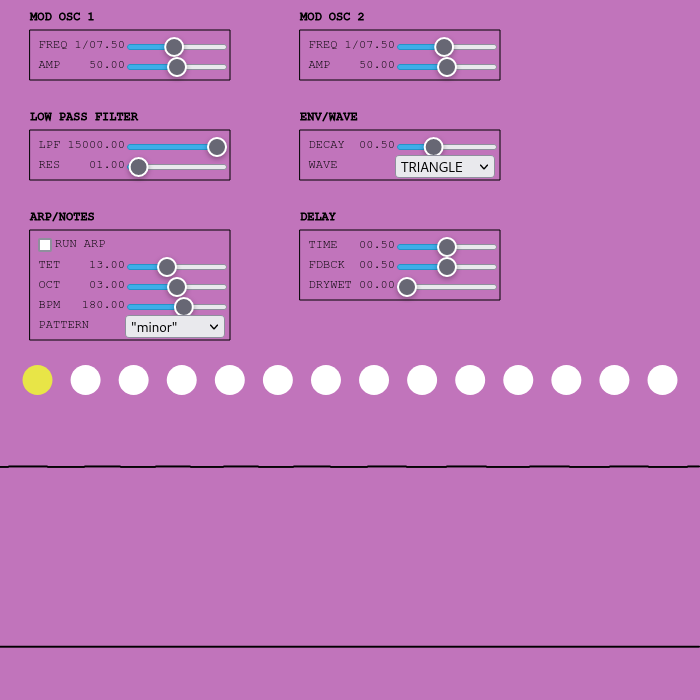

Changeable tuning FM synthesiser

This synthesiser/sequencer features an FM synthesiser with changeable parameters, and an arpeggiator whose tuning system can be switched between different X-tone equal temperaments. Built with p5.js.
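In an X-tone equal temperament the octave is divided into X equal steps, so the frequency of step k above a base pitch is base × 2^(k/X). A minimal Python sketch of that calculation follows; the function names and the 440 Hz base are assumptions, and this is not the p5.js code of the instrument.

```python
def edo_frequency(step, divisions, base_hz=440.0):
    """Frequency of `step` in an equal temperament with `divisions` per octave."""
    return base_hz * 2.0 ** (step / divisions)


def arpeggio(steps, divisions, base_hz=440.0):
    """Frequencies for a list of scale steps in the chosen temperament."""
    return [edo_frequency(s, divisions, base_hz) for s in steps]


if __name__ == "__main__":
    # The same step numbers give different chords in different temperaments.
    for divisions in (12, 19, 24):
        freqs = arpeggio([0, 4, 7, divisions], divisions)
        print(divisions, [round(f, 2) for f in freqs])
```

Switching `divisions` while keeping the step pattern fixed is one simple way an arpeggiator can move between tuning systems.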

Click to open.

“Wonderful Granular Synth Thing”

A granular looper/synthesiser that records a phrase played by the user and loops that phrase or granulates it with adjustable parameters. Built with p5.js, uses the granular-js library by Philipp Fromme (MIT license), prepared piano sample recorded by me.

Click to begin, use keyboard to play sample (octaves ‘Z’-‘,’ ‘Q’-‘I’, piano layout).